Previously on…

We wanted to detect intent signals

We wanted to do better than mere Slack notifications (i.e. find a way to dispatch signals to our CRM, include them in our scoring, analyse their impact on our conversion rates, …)

I (hopefully) convinced you that it was not only about automation, but also about storage and data structure.

Only then did i unfold my blueprint to build a complete intent signal detection system — a system that would enable all of the above.

Go back and read episode 1’s TL;DR if your memory’s failing you.

Now that we’re all set on theory, it’s high time we got our hands dirty.

This week, for the second and final part of House of Data, we’ll apply my blueprint to 3 real-life examples from a mission i took part in a few months ago, thanks to Bulldozer.

I’ll present:

the company we helped

the tool we used (Cargo)

i’ll explain how we laid the groundwork for the database

then, show you in detail how we implemented the following signals:

Website Visits

Negative Lemlist Interaction

Big Headcount Variation

and afterwards — think of it as bonuses — the less-detailed implementation of the following:

Lead Magnet Download

Ongoing Persona Hiring

Hired Persona

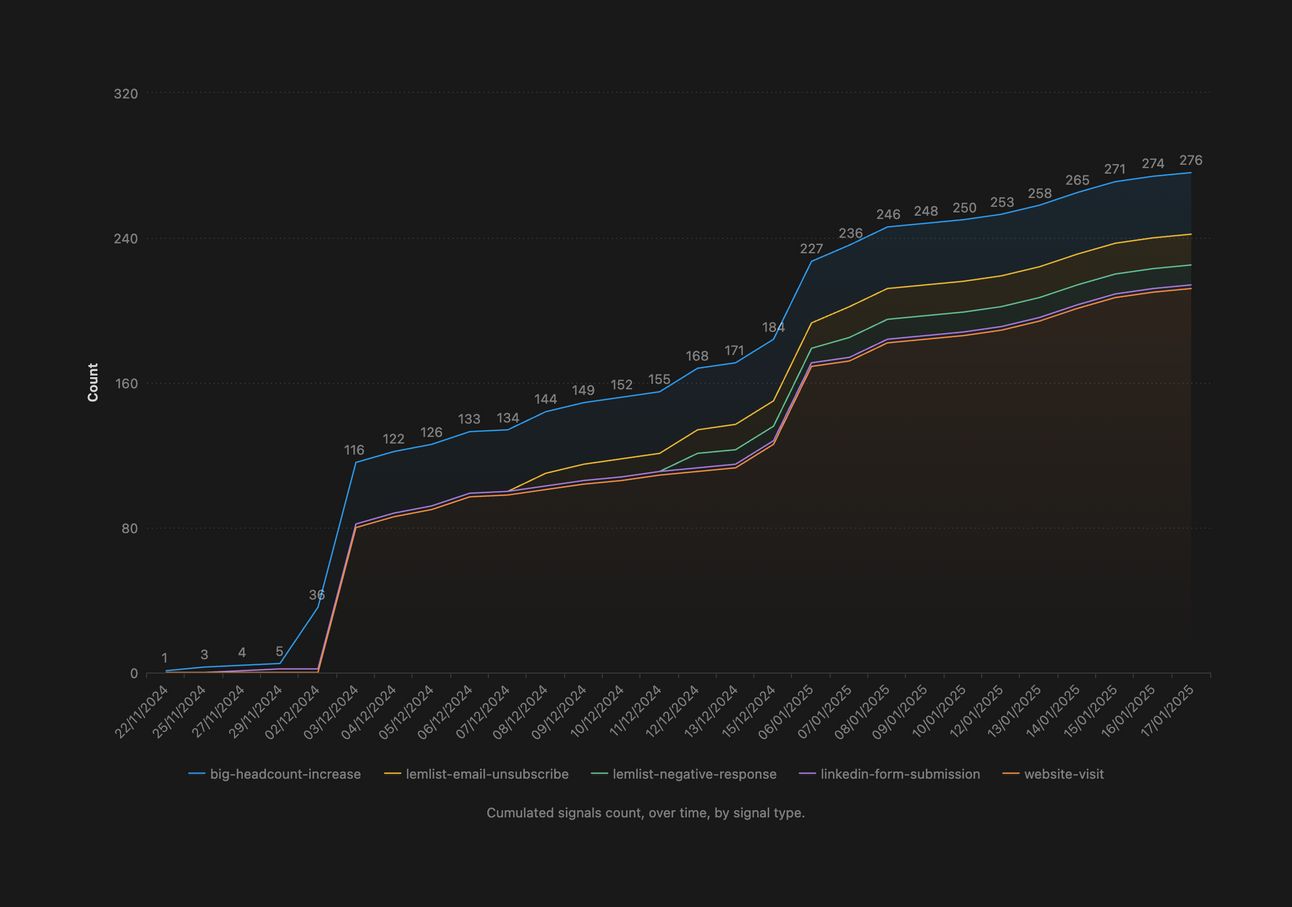

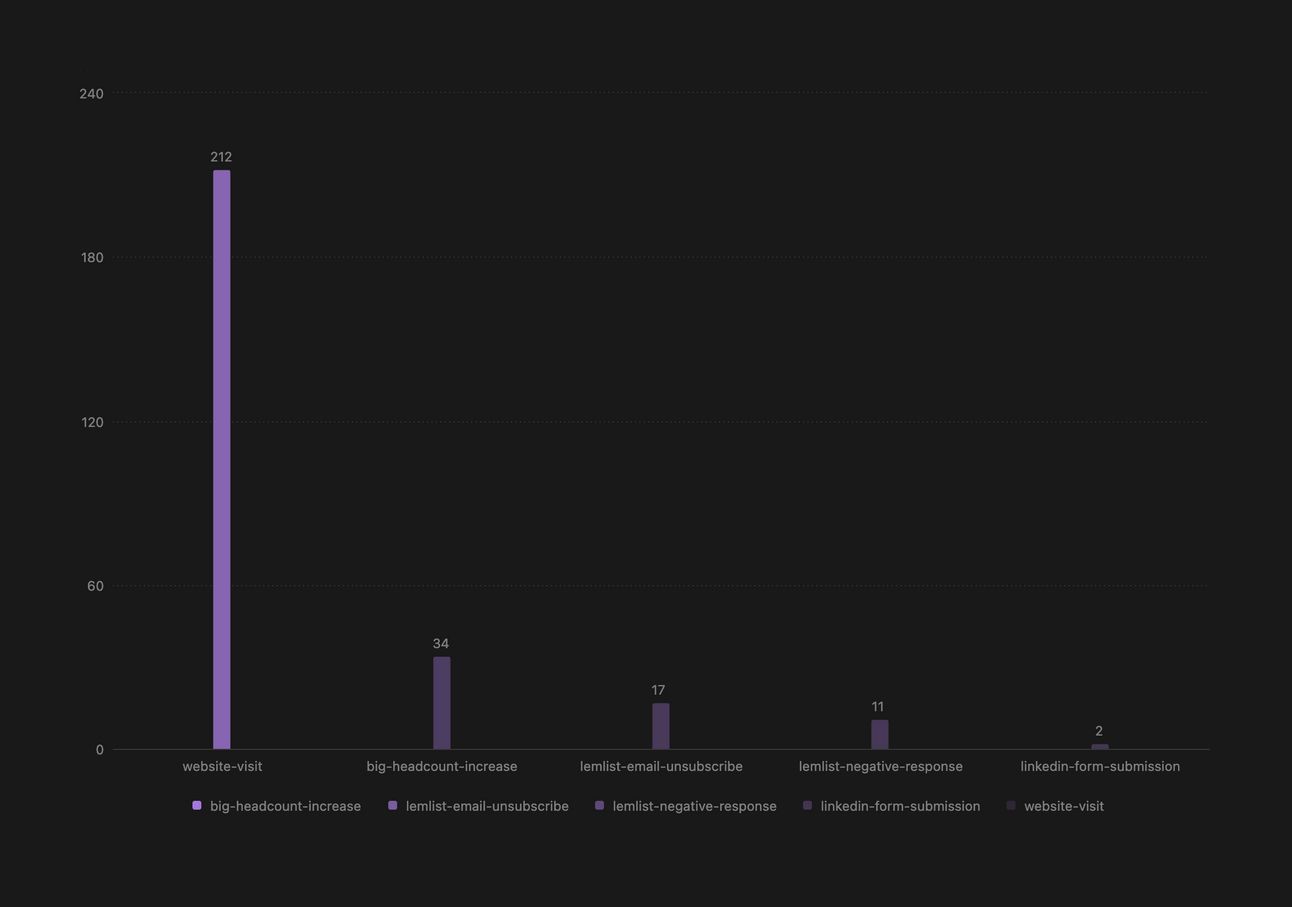

After a month on the mission, these were all the signals we had detected.

Let me show you how we got there.

Without further ado…

…a bit of context

I’ll be fast, that’s a promise 🌝

Hiretrend, our client, is a young HR Tech startup that helps companies predict and act on employee engagement.

Unsurprisingly, by helping their clients maximise their employees’ experience, Hiretrend helps them reduce replacement costs (aka turnover costs).

Their personas are the “responsables qualité de vie au travail” (French for “employee engagement managers”) working in consulting firms (their ideal customer profile).

It was an ABM mission carried out on behalf of Bulldozer, where i worked as a growth engineer (my specialty) whilst Benoît Pasquier acted as Head of Growth & ABM manager (his specialty).

Now, that’s where things get interesting: to fulfil our mission, we used Cargo.

If i had to come up with a headline for Cargo, i’d say it’s n8n and PostgreSQL merged together.

Now you get why i bring it up.

We said in the first episode that building an intent signal sensor was about both automation (n8n) and storage (PostgreSQL). Hence the relevance of Cargo: the first tool to bring both together (even though Make timidly attempted the same thing with its “data store”).

And, although i still prefer the n8n-postgres combo, i find their product extremely promising.

So yeah, now that you know more about the context, we can finally use that blueprint.

I. Foundations: the addressable market

As soon as the mission started, Benoît built a target list of 170 companies for Hiretrend.

We obviously did not want to cold email them from the get-go, but rather to wait for them to pass a specific score threshold, i.e. only once they had emitted enough intent signals.
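To make the threshold idea concrete, here is a minimal Python sketch of cumulative scoring. The signal weights, the `SCORE_THRESHOLD` value and the function names are all hypothetical (the article doesn’t publish Hiretrend’s actual grid); it only illustrates summing weighted signals per company and flagging the ones that cross the line.

```python
from collections import defaultdict

# Hypothetical weights: not Hiretrend's real scoring grid.
SIGNAL_WEIGHTS = {
    "website_visit": 10,
    "lead_magnet_download": 25,
    "ongoing_persona_hiring": 20,
    "hired_persona": 15,
    "big_headcount_variation": 10,
    "negative_lemlist_interaction": -30,  # assumption: a negative signal lowers the score
}

SCORE_THRESHOLD = 50  # hypothetical cut-off before a company gets cold emailed


def company_scores(signals):
    """Sum the weights of every signal detected for each company.

    `signals` is an iterable of (company_id, signal_type) pairs;
    unknown signal types count for 0.
    """
    scores = defaultdict(int)
    for company_id, signal_type in signals:
        scores[company_id] += SIGNAL_WEIGHTS.get(signal_type, 0)
    return dict(scores)


def ready_for_outreach(signals, threshold=SCORE_THRESHOLD):
    """Return, sorted, the companies whose cumulative score reaches the threshold."""
    return sorted(cid for cid, score in company_scores(signals).items() if score >= threshold)
```

Companies below the threshold simply keep accumulating signals until they qualify.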

So, based on episode 1 of the series, you’d probably have expected us to create two tables: one for companies and another for the contacts within these companies, linked together and both filled as we detected new intent signals.

Well… we did not 🌝

Let me explain:

the contact table

As you very well know, contacts are always harder (and more expensive) to find than companies. Therefore, we decided to only store and score companies in the database. We’d still be free to look for their contacts later on.

(moreover, even if a signal was emitted by a contact, we can almost always tie that contact to a company — the reverse is not true)
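A minimal sketch of this “companies only” data model, using Python’s built-in `sqlite3` as a stand-in for PostgreSQL or Cargo. Table and column names are assumptions (the identifier columns reuse the names introduced in the Identification section); signals point to companies through a foreign key, and there is deliberately no contacts table.

```python
import sqlite3

# Hypothetical schema: SQLite stands in for PostgreSQL/Cargo.
SCHEMA = """
CREATE TABLE companies (
    id                  INTEGER PRIMARY KEY,
    name                TEXT NOT NULL,
    linkedinId          TEXT UNIQUE,  -- NULLs are allowed and never clash
    referenceDomain     TEXT UNIQUE,
    linkedinCompanySlug TEXT UNIQUE,
    lowerCompanyName    TEXT UNIQUE
);
CREATE TABLE signals (
    signalId   TEXT PRIMARY KEY,                           -- prevents duplicate signals
    companyId  INTEGER NOT NULL REFERENCES companies(id),  -- link to the company
    signalType TEXT NOT NULL,
    detectedAt TEXT NOT NULL                               -- ISO-8601 date
);
"""


def create_db() -> sqlite3.Connection:
    """Open an in-memory database with the schema above."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when asked
    conn.executescript(SCHEMA)
    return conn
```

Contacts can still be looked up later for the companies that score high enough, without ever being stored here.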

the addressable market’s inflation

Still according to the blueprint, the addressable market should inflate as we detect new intent signals from unknown companies.

But, just as with the contact table, we decided not to let the addressable market grow during the first weeks of the mission.

Bottom line is: we can apply very strict constraints on the system at the beginning.

Think of it as a safeguard so that the system never gets the better of us, and we never drown in signals, contacts or companies we don’t yet know how to handle.

That gives us room to brace our system’s foundations before we slowly scale up.

My two cents: do the same.
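One way to encode that freeze, sketched in Python under stated assumptions: the freeze end date, the returned labels and the `route_signal` name are hypothetical. Signals from known companies are always stored; signals from unknown companies are discarded until the freeze ends, after which the company is created first and the signal stored afterwards.

```python
from datetime import date

# Hypothetical end of the "no new companies" period, not taken from the article.
MARKET_FREEZE_UNTIL = date(2024, 3, 1)


def route_signal(company_key, known_company_keys, detected_on, freeze_until=MARKET_FREEZE_UNTIL):
    """Decide what to do with a freshly detected signal.

    - known company: store the signal
    - unknown company while the market is frozen: discard it
    - unknown company after the freeze: create the company, then store the signal
    """
    if company_key in known_company_keys:
        return "store"
    if detected_on < freeze_until:
        return "discard"
    return "create_company_then_store"
```

Lifting the constraint later only means moving `freeze_until`, so the workflow itself doesn’t need rewiring.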

II. Identification

So, we had the LinkedIn Ids of the 170 aforementioned companies in Hiretrend’s addressable market. And, as you very well remember, the LinkedIn Id is the king of identifiers.

Unfortunately, we couldn’t rely on that one alone. As a reminder, we use identifiers both to prevent duplicates within our tables and to link tables together.

But, in this instance, we were about to link a variety of signals to the companies in the database, and we couldn’t be 100% sure we’d have a LinkedIn Company Id for each and every detected signal.

Thus, we needed other company identifiers, to use depending on the available signal data.

In our case: the referenceDomain (the clean company domain name); the linkedinCompanySlug (everything after “https://www.linkedin.com/company/” in a LinkedIn company URL); and the lowerCompanyName (the lowercase company name).
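A minimal sketch of how those three identifiers could be derived from raw signal data, assuming the inputs are a website URL (or bare domain), a LinkedIn company URL and a display name. The function names are hypothetical, and a few normalisation choices go beyond the article: the slug keeps only the first path segment (so `/about/` pages are dropped) and is lowercased, and whitespace in names is collapsed.

```python
from urllib.parse import urlparse


def _with_scheme(value: str) -> str:
    """urlparse only fills in the host when the string has a scheme."""
    return value if "://" in value else "https://" + value


def reference_domain(url_or_domain: str) -> str:
    """'https://www.Acme.com/about' -> 'acme.com' (a leading 'www.' is dropped)."""
    host = urlparse(_with_scheme(url_or_domain.strip().lower())).hostname or ""
    return host[len("www."):] if host.startswith("www.") else host


def linkedin_company_slug(url: str):
    """'https://www.linkedin.com/company/acme-corp/about/' -> 'acme-corp', else None."""
    path = urlparse(_with_scheme(url.strip())).path
    prefix = "/company/"
    if not path.startswith(prefix):
        return None
    slug = path[len(prefix):].split("/")[0]  # assumption: keep the first segment only
    return slug.lower() or None              # assumption: slugs compared case-insensitively


def lower_company_name(name: str) -> str:
    """Lowercase name with runs of whitespace collapsed (last-resort identifier)."""
    return " ".join(name.split()).lower()
```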

In total, that’s four unique identifiers for the companies of the addressable market: linkedinId, referenceDomain, linkedinCompanySlug and lowerCompanyName.

I’ve just listed them in order of preference, i.e. we use the linkedinId whenever possible, and the lowerCompanyName only as a last resort.
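Here is one way to apply that preference order when attaching a signal to a company, as a Python sketch. The dictionary-based indexes and the function names are assumptions; only the four identifier names come from the article. It tries each identifier in turn and falls through to the next one when a value is missing or doesn’t match any known company.

```python
# The identifier names come from the article; everything else is a hypothetical sketch.
IDENTIFIER_PREFERENCE = ("linkedinId", "referenceDomain", "linkedinCompanySlug", "lowerCompanyName")


def build_indexes(companies):
    """One lookup dict per identifier: {identifier_name: {value: company}}."""
    indexes = {key: {} for key in IDENTIFIER_PREFERENCE}
    for company in companies:
        for key in IDENTIFIER_PREFERENCE:
            value = company.get(key)
            if value:  # companies may lack some identifiers
                indexes[key][value] = company
    return indexes


def match_company(signal, indexes):
    """Return (company, identifier_used) for the first identifier that matches, else (None, None)."""
    for key in IDENTIFIER_PREFERENCE:
        value = signal.get(key)
        if value and value in indexes[key]:
            return indexes[key][value], key
    return None, None
```

Falling through on a non-matching linkedinId is a design choice: a stricter variant would stop at the first identifier the signal carries.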

III. Iterations

Sensor

“i’ve automated a signal” 🤡

“i’ve implemented a signal” 🤡🤡

“i’ve implemented an intent” 🤡🤡🤡

We don’t implement an “intent”.

We don’t implement a signal either.

We detect them.

And, you know, we’ve been detecting signals since the dawn of science:

electromagnetic waves

acoustic waves

seismic waves

Scientists don’t “implement a seismic wave” lmao

They detect them, and they do so with…

🥁

SENSORS.

That’s the word!

Sensors!

So, without further ado, i solemnly declare that from now on:

Any system engineered to detect, store and process intent signals shall be called an Intent Signal Sensor.

The sensors i implemented

Building intent signal sensors from the ground up is far from boring; explaining how to do it very much is 🌝

To soften the blow, i decided to keep it all in the Notion Gallery below. I guess it’s the most suitable UI for you to explore only the sensors you find intriguing.

So, my advice is the following: pick one sensor only; understand how the blueprint came into play; bookmark the 2 episodes of House of Data (the other one is here); and come back only when you intend to implement something similar.

TL;DR

Here we are. Wrap-up. The end.

TL;DR of this second and final episode:

A sensor’s implementation is always different; the method is always the same. Therefore, learn and remember the method only:

what’s our intent signal’s data source?

what data extractor to use?

what data will i get from the extractor?

which ones can i use to build my signal’s primary key?

which company identifier can i use as a foreign key for my signal?

what’s my signal data model?

how do i implement the workflow that stores the signal in the database?
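To illustrate the primary key and foreign key questions above, here is a hypothetical Python sketch of a signal record. The `event_key` field (whatever makes an occurrence unique in its source, e.g. a visit date or a Lemlist activity id) and the SHA-256 key are assumptions, not the author’s actual model; the point is that a deterministic key lets the storing workflow skip duplicates when the same event is extracted twice.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    signal_type: str  # e.g. "website_visit"
    company_id: str   # foreign key: whichever company identifier matched
    event_key: str    # hypothetical: what makes this occurrence unique in its source
    detected_at: str  # ISO-8601 date

    @property
    def signal_id(self) -> str:
        """Deterministic primary key: the same event always hashes to the same id."""
        raw = "|".join((self.signal_type, self.company_id, self.event_key))
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def store(signals_table: dict, signal: Signal) -> bool:
    """Insert the signal unless its primary key already exists; return True if inserted."""
    if signal.signal_id in signals_table:
        return False
    signals_table[signal.signal_id] = signal
    return True
```

Here, using the visit date as `event_key` would collapse several visits from the same company on the same day into one signal; a different key gives a different granularity.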

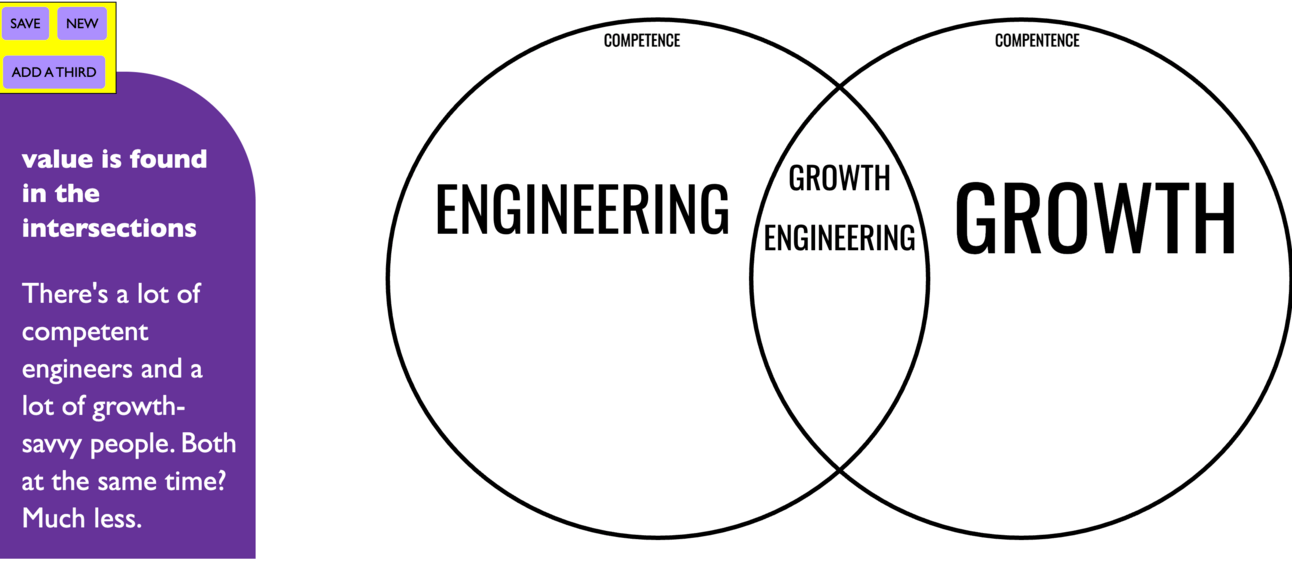

As a growth/GTM engineer, your role is not only technical:

You’re valuable because you lie at the center of that Venn diagram.

So take the time to step back and reflect on your technical choices.

Ponder their impact on sales.

And once you’ve squared that circle, seize the opportunity to infuse your vision of growth into the implementation.

Dare i call you artists?

Maybe.

I’ll call you craftsmen for sure.

Once intent signals are stored in a database, spitting out charts like the GORGEOUS Notion chart below is a breeze.

Quick reminder that the purpose isn’t just to have beautiful pictures to show, but to make informed decisions:

if we’ve detected only a single signal of type x in 3 months, maybe we should just deprecate it instead of spending time on maintaining it;

if leads on which we detected a signal of type y convert better than others, maybe we should increase that signal’s scoring weight;

etc.
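Both decisions can be computed straight from the stored signals. A hedged Python sketch, assuming each signal is a dict with `type`, `company_id` and `detected_on` keys and that you know which companies converted; the 90-day window stands in for “3 months”, and the `min_count` cut-off and function names are illustrative choices.

```python
from collections import Counter
from datetime import date, timedelta


def signals_in_window(signals, today, days=90):
    """Count detected signals per type over the last `days` days (roughly 3 months)."""
    start = today - timedelta(days=days)
    return Counter(s["type"] for s in signals if start <= s["detected_on"] <= today)


def deprecation_candidates(signals, today, min_count=2, days=90):
    """Signal types detected fewer than `min_count` times in the window."""
    counts = signals_in_window(signals, today, days)
    all_types = {s["type"] for s in signals}
    return sorted(t for t in all_types if counts[t] < min_count)


def conversion_by_signal_type(signals, converted_company_ids):
    """Share of companies carrying each signal type that went on to convert."""
    companies_per_type = {}
    for s in signals:
        companies_per_type.setdefault(s["type"], set()).add(s["company_id"])
    return {
        t: len(ids & converted_company_ids) / len(ids)
        for t, ids in companies_per_type.items()
    }
```

With small samples these ratios are noisy, so treat them as prompts for discussion rather than automatic weight updates.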

All this to say: don’t take the above charts and metrics for gospel, i just wanted to show how easy they were to create once i had all signals stored in a structured database.

Dopamine shop

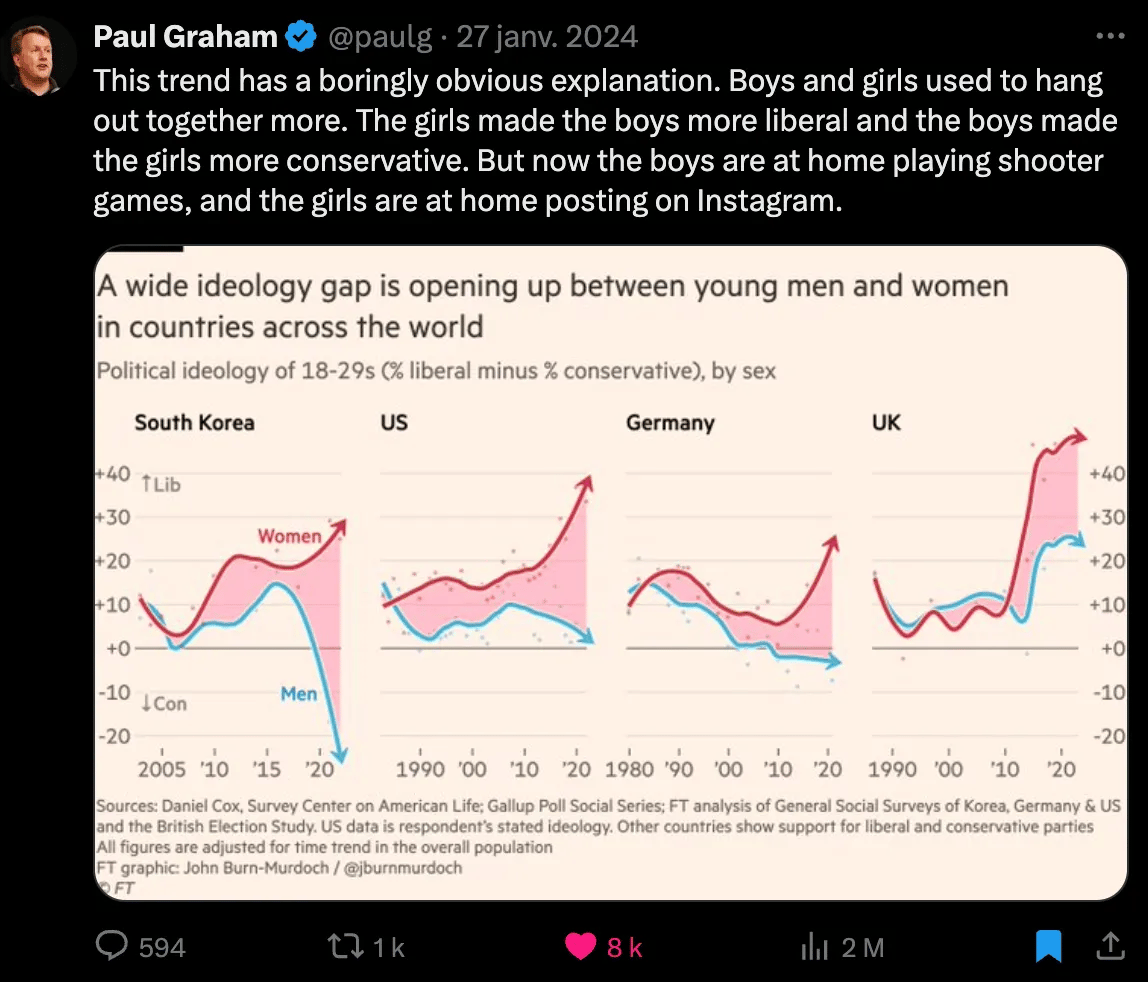

no doubt it’s the same in France (and across the western world)

The reality of dating apps → the math behind the dating apps we’re (almost) all using: how to retain men vs. women, behavioural differences between sexes, monetisation, … fascinating.

In Sydney’s airport, for eyewear

Cheers ✌

Bastien.